This essay surveys the recent research that has been conducted as part of the work at Noeon Research. The advancements in mathematical logic and category theory discussed below form part of Noeon Research's approach to building robust and interpretable general intelligence. Learn more: https://noeon.ai/.

Opinions expressed here are those of the author and should not be construed as opinions of Noeon Research.

Vladimir. I don’t understand.

Estragon. Use your intelligence, can’t you?

Vladimir uses his intelligence.

Vladimir (finally). I remain in the dark.

— Samuel Beckett, Waiting for Godot

Foreword

My aim is to give an alternative perspective on the foundations of knowledge representation. Knowledge representation is often thought of as a branch of artificial intelligence. However, I believe that any reflection on such topics contains too many simplifications if it overlooks the foundational aspects of ontology, logic and language. I endeavour to fill those gaps, so the question I pose is what it means to provide a language for knowledge representation. In this essay, I will emphasise those aspects of it that have remained overlooked in my view. Currently, the study of knowledge and ontology representation is left to the discretion of applied computer scientists and engineers, but their perspective, focused on practical solutions, can be extensively supplemented with a reflection on the foundations.

I believe that the current development of ontology and knowledge representation should revise its trajectory and transform itself into a multidisciplinary study that involves the foundations of mathematics, philosophical ontology and epistemology. Such synthesis might be more prospective in terms of being able to consider more complex assumptions on the nature of knowledge and cognition in the process of computational modelling. For this reason, the essay builds a view on the foundations of knowledge representation by developing the perspective on (meta)mathematics and the relevant topics in philosophy, thereby placing the mathematical contribution within the broader context. Along with that, I share some concerns regarding the current state of affairs in the industry, potentially precluding qualitative shifts in knowledge representation.

The reader should think of this essay as an overview of the philosophical conceptual field of ideas that might form a body of new directions in knowledge representation. The essay also places my paper, Term Assignment and Categorical Models for Intuitionistic Linear Logic with Subexponentials, in the context of the conceptual field. However, this essay does not suggest any ready-made practical solutions, but formulates the necessity of a deeper reflection in expanded form. This is why I prefer a freer form of a philosophical and programmatic statement of intent.

In terms of creating concepts in mathematics, the aforementioned paper has already introduced the concept of a Cocteau category along with a particular refinement of linear logic. In terms of creating concepts in philosophy, the essay suggests a project of procedural knowledge representation based on the mathematical contribution along with the foundational reflection. As I have said, I would leave developing the actual architecture to colleagues specialising in computer science, but I pick up the prism for a philosophical analysis. The reader should also bear in mind that the claims I pose are subject to further discussion and deeper research; therefore, I encourage the reader to draw their conclusions. This is why the essay includes quite a few topics, prima facie having nothing in common with one another. Still, I hope that, over the course of the essay, I was able to explain to the reader how philosophy and the foundations of mathematics form the ground for theoretical knowledge representation.

§1 From language towards logic

Mathematical logic is an endeavour to address ultimate questions such as ‘what is theory?’, ‘what is a model of a theory?’ or ‘what is truth?’ directly related to the foundations of pure mathematics by purely mathematical means. In this sense, mathematical logic is a formal rigorisation of the philosophy of mathematics. Motivating a particular contribution to mathematical logic, such as mine, presumes there is a vision of the big picture of logic. Logic, as the foundation of mathematics, studies what makes concepts created by other mathematicians valid and what validity is per se. All of the known approaches to the foundations — from Principia Mathematica to Homotopy Type Theory — have a common attribute: they suggest a language allowing one to write down mathematical constructions and proofs formally, that is, transparently and without appealing to psychological persuasiveness. Logic is not only about the foundations of mathematics, it also covers computability theory, formal grammars, computational complexity and model theory. And in its every particular hypostasis, logic comes along with these or those references to the notion of a language.

Let me provide the perspective on the question of what makes logic logic. I mark out the line between logic and language since a lack of such distinction might lead to perplexing puzzlement: terminological confusions might lead to various misconceptions. I find it relevant to provide a bird’s-eye view of the dimensions the concept of language encompasses and from what angles language has been studied in linguistics.

Linguistics classifies grammatical structures, morphological patterns of expressions and the correlations between speech and neural activity. Philosophy of language studies somewhat similar problems, but at higher levels of generality. Many branches of philosophy of language maintain a Wittgenstein-inspired direction aligned with linguistics, which resulted in a family of approaches to, for example, speech acts, proper names and analysing the aspects of meaning through use cases. (Post)structuralist French-speaking philosophy had experienced its linguistic turn as well. Such concepts as deconstruction are rooted in the criticism of essentialism, making the concepts of meaning and sense more fluid and context-dependent. If English-speaking specialists in philosophy of language (and its derivatives such as philosophy of consciousness) often position their exercises as science-based to a certain degree, continental authors would rather provide a framework of how one can critically analyse language and speech through their manifestations in culture, art, politics and social practices. As Roland Barthes noted in his Elements of Semiology:

A language is therefore … is at the same time a social institution and a system of values. As a social institution, it is by no means an act, and it is not subject to any premeditation. It is the social part of language, the individual cannot by himself either create or modify it; it is essentially a collective contract which one must accept in its entirety if one wishes to communicate. … As a system of values, a language is made of a certain number of elements, each one of which is at the same time the equivalent of a given quantity of things and a term of a larger function, in which are found, in a differential order, other correlative values.

Linguists often study natural languages in the same sense as other scientists study their fields. Languages for linguists are objects coming from the external world, which are yet to be classified and explained in every particular aspect. The notions of syntax and semantics in linguistics are purely descriptive as they depict what grammatical patterns one can observe and how one can interpret these or those phrases, and under what circumstances. The concepts of syntax and semantics in logic share certain common features with syntax and semantics in linguistics, but the similarities are rather superficial.

Logic also develops artificial languages to present statements, proofs or, more generally, patterns of deductive reasoning as mathematical objects. Although the matters of semantics originally come from linguistics, logicians also tackle the semantic questions of meaning in a sense not so close to their natural language counterparts. In both logic and linguistics, there are interconnections between expressions and statements with entities and relations between them (such interconnections are often described in analytic philosophy with the concept of reference). However, in logic, one expects that such interconnections are aware of provability arising from axioms and laws of deductive inference. That is, anything provable syntactically should be uniformly reflected in the ontological assumptions to achieve consistency. This requirement makes semantics in logic more rigid and fixed compared to theoretical linguistics: the field of linguistics has always been beyond the matters of provability and consistency.

I would further discuss how one can distinguish logic and language (as it is studied in linguistics) and determine the field of logic. There is a common belief that logic is a prescriptive discipline describing the universal laws of ‘correct’ thought. For example, this is how Immanuel Kant, one of the many prominent authors sharing this vision, reproduced such an angle of view in his Critique of Pure Reason:

… [General logic] contains the absolutely necessary rules of thinking, without which no use of the understanding takes place, and it therefore concerns these rules without regard to the difference of the objects to which it may be directed. … General logic … abstracts from all contents of the cognition of the understanding and of the difference of its objects, and has to do with nothing but the mere form of thinking.

However, I am adamant that such treatment of logic is rather archaic. The development of mathematical logic, starting from Frege and Russell, provided the formalisation of classical logic, assumed to be the logic of a priori truth. Classical logic, in turn, has several restrictions, which make it not so sensitive to various aspects of formal reasoning. Logicians refine logical syntax and semantics to depict aspects of reasoning uncovered in classical logics because of its restrictions. And those limitations hinted at a landscape of alternative logical formalisms leading to the doctrine of logical pluralism. So it would be fallacious to claim that there is a single logical system to rule all of the principles of truth, but there is a variety of logics, unobservable, but only conceivable as a whole.

Logical pluralism makes the notion of logical truth contingent on factors such as the definition of truth in a specific logical system and the interpretation of logical connectives. Therefore, the meaning of a logical proposition is not its a priori essence, but the result of a particular interpretation of logical primitives afforded for usage at this moment under a currently chosen definition of meaning. The flexibility of meaning in natural language and logical pluralism are united in their rejection of apriorist essentialism, which seems to be an inherited delusion from earlier stages of human thought by inertia.

All in all, my vision is that mathematical logic covers two fields: metamathematics and the permanent classification of what kinds of deductive reasoning one can approximate by formal mathematical means. As metamathematics, logic analyses how mathematical theories can be arranged formally and, thus, attempts to justify pure mathematics as a creative discipline that invents concepts yet relies on rigorous proofs. But the more one modifies the notions of syntax and semantics, the more regions of reasoning one can discover. Therefore, as a classification, logic is a permanent process that has already enriched the landscape of logics with such directions as modal logic, fuzzy logic, linear logic, intuitionistic logic, paraconsistent logic, type theory, etc.

§2 On Proof Theory and Categorical Logic

As I have noticed above, logic is sensitive to the distinction between syntax and semantics. The language of logic allows us to represent statements by means of formal syntax, whereas semantics assigns value to every statement. In classical logic, for example, every statement is either true or false. Classical logic satisfies the so-called principle of bivalence introduced by Gottlob Frege in his pioneering Begriffsschrift and further popularised by Bertrand Russell and Ludwig Wittgenstein in mathematical logic and philosophy of language.

In the practice of ordinary speech, common sense says that proof is merely a tool to extract the truth. But logic distances itself from common sense as too restrictive and distinguishes provability and truth. The provability of a statement means it is syntactically derivable from axioms and inference rules, whereas truthfulness is the semantic meaning of a sentence that follows from the formal definition of how values are assigned to propositions. In the simplest case of classical propositional logic, the truth-related definitions are given with truth tables. But the further we move away from the Boolean-Fregean binary true/false opposition, the more complex the notion of meaning becomes. Moreover, the concept of truth itself is subject to permanent revision. More generally, semantics in logic interconnects provable statements and their meanings, that is, states of affairs satisfying them. On the side of syntax, proof is a finite sequence of formulas obtained by using axioms and inference rules, whereas meaning is assigned to statements by giving a mathematical definition of satisfaction that depends on a particular logic. For example, the meaning in classical propositional logic is represented with purely combinatorial truth tables, but every modification of syntax requires providing mathematical structures modelling states of affairs that make provable statements satisfied beyond their syntax.

As I have earlier noticed, there is no single logic, but a landscape of logics, so I focus on two segments of it: intuitionistic (or constructive) logic(s) and linear logic(s). Intuitionistic logic has a comprehensively discussed philosophical basis, whereas the corresponding metaprinciples of linear logic lack a similar conceptual reflection. To outline, in turn, the philosophical foundations of intuitionistic logic, I would emphasise a problematic point in classical mathematics that stimulated the development of intuitionism. Consider the following statement:

There are irrational numbers a and b such that aᵇ is rational.

There is a clever trick to prove it without raising irrational numbers to a power too much. One can take √2, then if (√2)⁽√²⁾ is rational, then this is it. Otherwise, one can take ((√2)⁽√²⁾)⁽√²⁾. In either case, we have a rational number. If the former alternative is the case, then we take a = b = √2. Otherwise, we take a = (√2)⁽√²⁾ and b = √2. This is a completely acceptable reasoning in classical mathematics, where mathematical entities are treated as objectively existing citizens of the Platonic world of ideas. In this setting, a mathematician’s super-objective is to describe the ontology of this world consistently. Therefore, the answer of existence in classical mathematics is binary. So classical logic, with its principle of bivalence, was designed to characterise patterns of reasoning correct in terms of the true/false opposition. Proving this statement by providing particular irrational numbers might deal with, for example, Liouville numbers or even referring to some rather non-trivial results in analytic number theory. Anyway, the constructive argument requires raising some particular number to some power more explicitly.

If the reader has a glance at that proof, they will see that it does not construct an explicit proof by providing two particular irrational numbers. Constructive mathematics suggests an alternative perspective. If classical mathematicians consider statements a priori either true or false, they aim to discover which is which. Constructive mathematicians consider theorems as tasks to be creatively solved. Thus, a proof is an explicit solution. This distinction is not merely a matter of façon de parler since following this framework implies that quite a few classically acceptable postulates, such as the law of excluded middle, are rejected. For instance, the proposition ‘either P = NP or P ≠ NP’ is classically true. The constructive interpretation would mean that one can explicitly determine whether P = NP or not, which would allow solving one of the Millennium Prize Problems. For a classical mathematician, it is a priori already known in the Platonic world which of the alternatives is the case. But a constructivist would rather refrain from apriorist speculations and would care about the proof itself and its intuitive clearness. This is why, in particular, this branch of logic and the foundations of mathematics is called intuitionism. As Michael Dummett, an Oxford philosopher and logician, remarked in his Elements of Intuitionism:

On an intuitionistic view, … the only thing which can make a mathematical statement true is a proof of the kind we can give: not, indeed, a proof in a formal system, but an intuitively acceptable proof, that is, a certain kind of mental construction. Thus, while, to a platonist, a mathematical theory relates to some external realm of abstract objects, to an intuitionist it relates to our own mental operations: mathematical objects themselves are mental constructions, that is, objects of thought not merely in the sense that they are thought about, but in the sense that, for them, esse est concipi1.

Turning to the semantics of intuitionistic logic, truth tables are no longer applicable. Thus, the question of meaning in constructive logics requires developing an alternative approach. As a heuristic, one can bear in mind the Brouwer-Heyting-Kolmogorov interpretation of logical connectives, but this interpretation is not really formalised; that is, it does not assign a value to every statement, but guides on how one can read connectives and quantifiers without appealing to Platonic truth. There is also Kripke semantics of intuitionistic logic and its generalisations, but I proceed with categorical semantics directly as more reflecting the core motivation of intuitionism.

Firstly, I am going to depict how category theory shifts the perception of mathematics. In mainstream mathematics, one studies structures such as groups, fields or topological spaces. All those structures are sets (of numbers, polynomials, points) with pieces of extra data such as operations (addition, multiplication, etc), relations (less than or equal to, being parallel, etc) or even subsets of elements (such as open sets). Ultimately, algebra, for instance, studies sets with operations, i.e. algebraic structures. Alongside sets with an extra structure, there are functions between them – not arbitrary ones, but those that preserve the underlying structure, such as continuous functions in real analysis and topology. Category goes further and therefore expands the landscape. Back in the 1940s, Samuel Eilenberg and Saunders Mac Lane noticed that structure-preserving functions have many common properties and introduced a more general language to depict those invariants, which in turn inspired new developments in algebraic topology, algebraic geometry and logic. This categorical language has already been woven into the fabric of logic quite integrally. For instance, there are categorical generalisations of model theory, computability theory and even Cohen’s proof of the Continuum Hypothesis Independence.

In logic, we prove statements using premises and conditions that imply the goal statement:

The latter is read as ‘a proposition A is provable from A₁,…, Aₙ. Semantics attributes some mathematical object to every correct proof, that is, to every proof derived by using rules of, say, intuitionistic logic. In classical (propositional) logic, we would rather assign all truth values to A₁,…, Aₙ and ensure that A is true whenever all the assumptions are true. But, constructively, every statement is a task to be solved, so this provability is read as ‘a problem A has a solution provided that A₁, …, Aₙ have already been solved’ or ‘there is a function f that takes a tuple (a₁, ..., aₙ) where a₁ solves A₁, … , aₙ solves Aₙ and returns f(a₁,…,aₙ) that solves A’. Truth tables are used for the simplest case of classical propositional logic, but they do not depict this intuition. Whereas the categorical concept of morphism reflects the idea of provability as an actual ability to solve the corresponding problem.

We interpret constructive proofs in Cartesian closed categories. Cartesian-ness enables modelling the constructive conjunction with finite products, the concept generalising the Cartesian product. That is, one could say let k₁ be a field of characteristic p and let k₂ be an algebraically closed field. In this semantics, a justification of this assumption would be a two-component data, the first projection of which shows that the sum of 1 p times is equal to 0. The second projection proves that any polynomial with coefficients from k₂ has a root in k₂. Thus, one interprets such conjunctive statements as direct products with projection arrows, allowing the extraction of each component. Closedness admits conditional statements such as ‘if k is algebraically closed, then every polynomial with coefficients in k splits into linear factors’. A constructive proof of such a statement is a function that takes a proof that k is algebraically closed and returns a proof of whatever polynomial from k[X] one takes, it is splittable. Constructive exponentials are reflected by so-called exponential objects having the same formal properties as implication in intuitionistic logic. Note that the more one expands the syntax of the constructive formalism with extra affordances, the richer structures one obtains, such as Grothendieck topoi. Olivia Caramello’s Theories, Sites and Toposes and Introduction to Higher-Order Categorical Logic by Joachim Lambek and Philip Scott are comprehensive guides on the area.

Type theory, in turn, connects constructive proofs and computation by making the BHK-interpretation more explicit via the widely discussed Curry-Howard Isomorphism. There are plenty of nuances to discuss indeed, but, roughly, one summarises the Curry-Howard Isomorphism as a combination of two principles: ‘propositions-as-types’ and ‘proofs-as-programs’. Recall that any proof is an explicit method solving a problem, so one can concretise this heuristic by supporting every formula with its method encoding its proof. Importantly, the language of proofs itself is based on the lambda calculus. This is why the principle is called ‘proofs-as-programs’: building a constructive proof becomes a computational procedure. We also think of formulas as data types since we attribute proofs to formulas in the same way as we assign programs to data types in (functional) programming languages. And the role of category theory is not only to determine the meaning of constructive proofs, but also to concretise the notion of a program. Indeed, informally, one can think of a program that consumes some data and returns an output, but the notion of a categorical morphism actualises this intuition rigorously. More generally, type-theoretic formalisms are associated with their categorical semantic models through the concept of an internal language: well-typed procedures formally presented in the underlying theory play the role of a syntactic description of morphisms one can construct with provided affordances.

§3 Linear Logic and Cocteau categories

Linear logic generalises both intuitionistic and classical logics. As I have already discussed, the meaning of a statement in classical logic is understood in terms of truthfulness and falsity, whereas the meaning of a proposition in intuitionistic logic is an explicit procedure solving a particular task. Linear logic, introduced by Jean-Yves Girard back in 1987, goes further and suggests interpreting the meaning in terms of resources and broadens the understanding of the concept of a constructive proof. As Girard noted in one of his works:

Classical and intuitionistic logics deal with stable truths: if

AandA → B, thenB, butAstill holds. This is perfect in mathematics, but wrong in real life, since real implication is causal. A causal implication cannot be iterated since the conditions are modified after its use; this process of modification of the premises (conditions) is known in physics as reaction.

One can illustrate the idea with a toy example. Assume you have £7, but whenever you have such an amount of money, you can buy a pint of ale at a local pub. But when you spend £7, you perform an action making your bank account balance less, and this is generally not invertible as a result of the already performed action. Thus, the interpretation of logical formulas as actions relying on resource consumption requires revisiting both syntax and semantics quite deeply. Although the motivation of linear logic is not limited to bringing the reader to sobriety, I would rather suggest the reader familiarise themselves with Jean-Yves Girard’s The Blind Spot to cover more topics regarding the general underlying premises.

Girard coined concepts such as geometry of interaction, phase semantics, coherence spaces and proof nets to generalise constructive proofs and provide a geometric perspective for them. However, I focus on categorical semantics to refine the Brouwer-Heyting-Kolmogorov fashion of thought. Previously, I had discussed Cartesian closed categories and how constructive proofs are interpreted in them. Now we need a naturally occurring counterpart of them to equip resource-aware proofs with analogous semantics agreed with the computational content coming from lambda terms. Let me have a look at the following provability in intuitionistic logic once more. In A₁,…,Aₙ ⊢ A, we have interpreted the left-hand side A₁,…,Aₙ as the Cartesian product A₁ × … × Aₙ, whereas the Cartesian product operation admits arrows corresponding to the structural rules already declared pathological!

In intuitionistic logic, there is always the copying arrow A → A × A since one can always send a ∈ A to a pair (a,a) ∈ A × A2, so, in terms of proofs, every proof of A can be used as much as one needs without any restriction. There is also the deletion the arrow A → 1, where 1 is a one-element object (a terminal object, in category-theoretic terms) since one can send any a ∈ A to • ∈ 1. Logically, it means that we have globally accessible structural rules, that is, inference rules manipulate the structure of a logical inference. The arrow A → A × A corresponds to the contraction rule, saying that if we used a particular premise twice, then we can apply it once. Or, if we derived Γ, A, A ⊢ B, then one can also derive Γ, A ⊢ B, where Γ is a finite set of other premises used to infer B. An arrow A → 1 reflects the weakening rule saying that we can extend our premises anyhow we want, i.e. if we deduced Γ ⊢ B, then we also infer Γ, A ⊢ B for any A. However, in linear logic, neither of those structural rules is afforded at all. The conclusion is that the concept of finite product is yet to be generalised.

Finite products are not the only way of combining structures since it is not natural for vector spaces, algebras and modules, which are combined with the tensor product operation, widely known in many areas of mathematics and physics. Monoidal categories are a unifying concept that allows one to think about algebraic structures such as groups and monoids and the aforementioned tensor product using a single vocabulary. A category is called monoidal if for any two objects A and B, their tensor product A ⊗ B is defined, and the tensor product should satisfy several conditions, such as the MacLane pentagon, that I omit. The name ‘linear logic’ comes from the resemblances between formal properties of linear logic semantics in monoidal categories, in particular, the category of coherence spaces and vector spaces, the first example of the notion of a monoidal category.

Now we can interpret proofs without admitting pathological principles from a resource-aware angle, since neither A → A ⊗ A nor A → 𝟙 is admissible; 𝟙 also satisfies weaker postulates than terminal objects in Cartesian closed categories. Now a sequent A₁,…,Aₙ ⊢ A is interpreted as A₁ ⊗ ... ⊗ Aₙ → A that requires a different informal intuition as well. Now we would rather interpret ‘we can perform an action A by consuming A₁ ⊗ ... ⊗ Aₙ’, and we model the process of input data consumption with the tensor product in monoidal categories. As far as we think of assumptions as resources, then we must consume every particular premise exactly once. This perspective connectes linear logic with resource-sensitive computation, the mathematical foundations of quantum physics, linguistics and other topics, in which tracking the usage is substantial. Moreover, we can also transfer the Curry-Howard correspondence to linear logic, so we have the computational interpretation that has influenced such areas as reactive programming, quantum computation and linear type theory. Let me also note that such connectives as implication and conjunction should also be resource-aware. (Multiplicative) Conjunction in linear logic is now understood in terms of ⊗. So ‘A and B’, denoted as A ⊗ B is now read as ‘you can consume both A and B simultaneously’ or ‘there is an affordance to perform A and B in parallel’: note that such an interpretation generally does not allow decomposing A ⊗ B into its constituents A and B unlike the finite product A × B.

The implication connective is also modified: in classical and constructive logic, we have the principle A ∧ (A → B) → B, that is, ‘a statement B holds whenever A holds and A implies B. That ∧ is the Cartesian product category-theoretically, so the concept of implication requires the corresponding generalisation as well. We read the linear implication A ⊸ B as ‘we can perform an action B consuming A once’ and it is equipped with the corresponding arrow A ⊗ (A ⊸ B) → B. In category-theoretic terms, ⊸ corresponds to the concept of internal hom that abstracts over the concepts of spaces of linear maps in the categories of vector spaces or the Abelian group of homomorphisms in the category of Abelian groups.

The language of linear logic also allows one to locally ease the restrictions on the resource use via the exponential operator (or modality) !A3. We interpret formulas modalised with ! as ‘an action A can be performed finitely many times’. From a logical point of view, the ! makes intuitionistic logic a subsystem of linear logic. The key observation is that we can express the constructive implication A → B as !A ⊸ B, whereas the constructive conjunction A × B is expressed as !(A ⊗ B). From a category-theoretic point of view, ! is an operator on linear types, taking a type A and returning !A equipped with the copying and deletion arrows !A → !A ⊗ !A and !A → 𝟙 (that is, a comonoid object) that we generally lack. Computationally, ! provides a flexible mechanism to call a particular procedure or a variable as many times as you require within the body of a resource-sensitive action.

On the other hand, !A might give you too much freedom, so we would not like to overlook intermediate restrictions on resource consumption. !A allows performing an action A any finite number of times, whereas, by default, we can call any action exactly once. Combinatorially, we extract two more modes of resource policy. An action is called relevant if it should be used at least once. In other words, we are allowed to multiply them, but deletion is not afforded. But there are also affine actions that one can use at most once; thus, we can delete them without being able to multiply n times for n > 1. The example with £7 is affine since there is an option not to spend £7: if it were linear, you would have an obligation to spend £7 on a pint of ale, but people are not generally forced to spend money on alcohol.

One can capture all those resource policies within a single formalism by using the concept of a subexponential. Previously, we had !A with the copying and deletion operations, i.e., !A → !A ⊗ !A and !A → 𝟙 since the operator ! allows one to perform an action A as many times as we want, but now we introduce more operators with weaker abilities. Now we have !ₐ A meaning that an action A can be used at most once, that is, we can discard it without repetitions in the future (the subscript ₐ stands for affine). Category-theoretically, we are provided with !ₐA → 𝟙, whereas the affordance!ₐA → !ₐA ⊗ !ₐA is not allowed. We also extend our language with the modality !ᵣA (ᵣ for relevant) meaning that an action A should be performed at least once, that is, we must use A in our further procedures a non-zero number of times, but we cannot proceed ignoring A, so we are given !ᵣA → !ᵣA ⊗ !ᵣA, but !ᵣA → 𝟙 remains inaccessible. A subexponential is a modal operator suggesting fewer abilities on resource consumption compared to the exponential operator !A, which explains its name, sub-exponential. In the paper, I suggest a type-theoretic approach to designing such systems with multiple resource usage policies and establish the standard proof-theoretic properties that the computation process in the system always terminates with a unique result. This property is, of course, not transferable to general-purpose programming languages for well-known reasons, but demonstrates that canonically built procedures combining several resource policies within their bodies are not deviant in terms of computational behaviour.

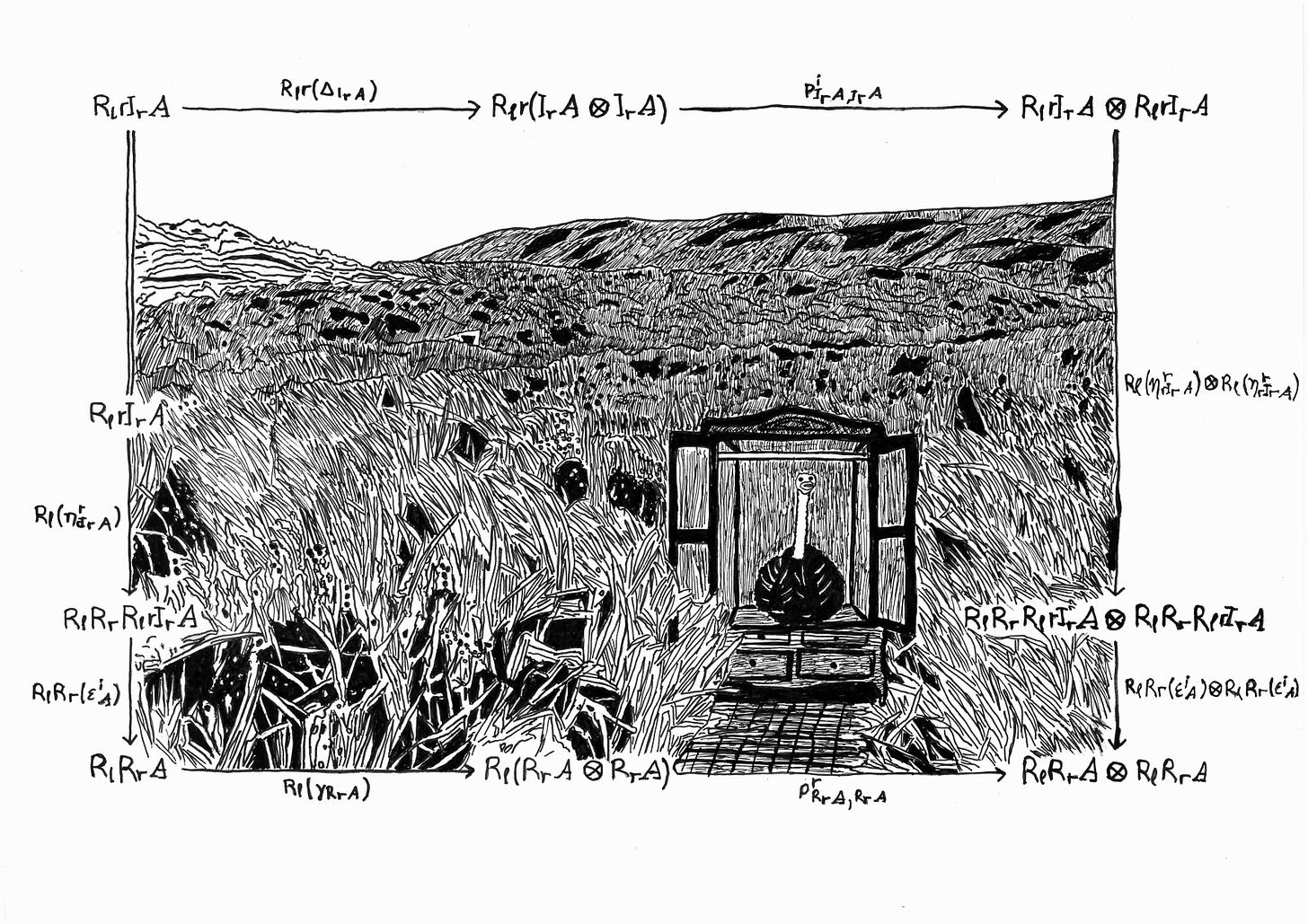

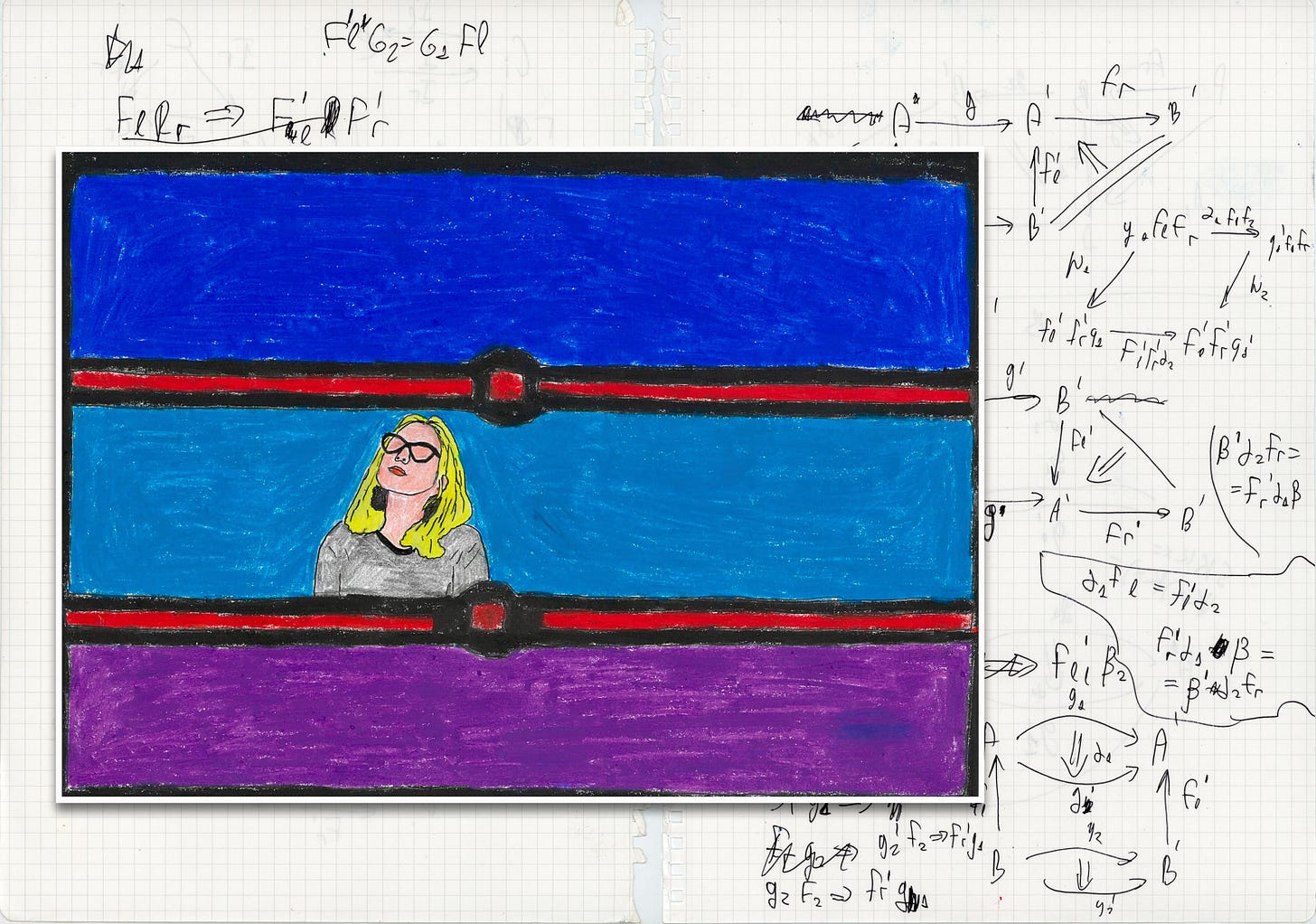

Regarding categorical semantics, there is a landscape of category-theoretic frameworks for the semantic analysis of linear logic. I recommend the reader to familiarise themselves with Paul-André Melliès’s Categorical Semantics of Linear Logics and Pierre-Louis Curien’s Introduction to Linear Logic and Ludics for a more systematic background on the variety of those approaches. Let me just recall that a symmetric monoidal category with an exponential comonad is called a linear category, so the idea is to equip linear categories with two extra comonads reflecting affine and relevant actions within the same monoidal category. I coined the term ‘Cocteau category’ not because I believe that Jean Cocteau had a prominent contribution to category theory. But ‘Cocteau category’ is a more laconic expression instead of accurate, but cumbersome phrases such as ‘the enrichment of a linear category with two additional symmetric lax monoidal comonads and symmetric lax monoidal monad transformations between them’. Jean Cocteau is known for his Orphic trilogy on poetry and death, consisting of the films Le sang d'un poète, Orphée and Le testament d'Orphée, so the choice of the name praises Cocteau’s trilogy and, thus, emphasises the ‘trinitary’ nature of manipulating premises in a resource-aware way. In the paper, I gave a generalisation of the concept of a Cocteau category with so-called assemblages4, that is, symmetric monoidal categories equipped with a family of comonads. Using this generalisation, one can show that the linear type theory with subexponentials is semantically adequate with respect to Cocteau categories as well. But the adequateness holds for the whole class of typed linear lambda calculi with subexponentials with respect to the corresponding assemblages.

§4 Knowing How and Substructural Type Theory

I have been discussing concepts from metamathematics that I find relevant for discussing the foundations of knowledge representation. Now let me focus on the aspects of epistemology and ontology to complete the exposition. Back in the 1950s, Gilbert Ryle, an Oxford philosopher, studied the distinction between knowing how and knowing what in his monograph The Concept of Mind. Let me illustrate the difference between those two knowings by examples. You could know how to play snooker, and this knowledge might contain skills you do not verbalise. For example, if you are an ace snookerist, you might be able to describe verbally how you cut a ball step by step. But most people would rather express their knowledge by performing the corresponding action without necessarily being able to explain it in precise terms. This kind of knowledge is often classified as procedural. On the other hand, you could know facts, data, theories and their implications and express them as some propositions. This ‘knowing’ is called knowing what, or propositional (or conceptual) knowledge. For example, one might know what Peano arithmetic and its axioms are or that Pont Neuf is a bridge across the River Seine in Paris, as explicitly verbalised facts.

The aspects of both kinds of knowledge have quite a few subtle nuances. I just note that before Ryle, epistemologists had generally maintained the intellectualist position, according to which procedural knowledge is a manifestation of conceptual knowledge. Ryle in The Concept of Mind suggested arguments in favour of the irreducibility of knowing how in terms of propositional knowledge, but I refer the reader to the book itself. There are different schools of thought on whether such a distinction is applicable indeed. I think of knowing how as a complex of means that makes a particular subject able to perform this or that action. Such complexes could be composed of bits of propositional knowledge as well as unverbalised skills. I think of knowing what and knowing how as densely interconnected.

Knowledge of anything, first of all, assumes that someone is aware of a certain state of affairs, so developing a language for describing knowledge relies on the assumption that states of affairs do have some representation accessible to the perception. In other words, I cannot suggest a language for knowledge representation without answering the question ‘what ontology is’ or ‘what an ontology is’. Ontology has existed approximately as long as philosophy has, so the reader should not expect that I am going to give a comprehensive guide to ontology by the end of the discussion.

In philosophy, ontology tackles the question of what being is. Ontology, as an area of philosophy, has its origins in Ancient Greece, and Parmenides was the very first ontologist. Some 2,500 years later, French philosopher Alain Badiou, in Being and Event and other works, puts forward a thesis that ‘ontology is mathematics’, i.e., the language of Zermelo-Frankel (ZFC) set theory and its axioms is a sufficient playground for tackling the most general ontological questions in the following sense:

The thesis [ontology is mathematics] that I support does not in any way declare that being is mathematical, which is to say composed of mathematical objectivities. It is not a thesis about the world but about discourse. It affirms that mathematics, throughout the entirety of its historical becoming, pronounces what is expressible of being qua being.

Badiou also developed an original approach to interpreting entities in themselves as multiplicities, which are exactly sets in set theory. Relations between multiplicities are described with the membership relation ∈, as it is given by the ZFC axioms. Generally, I agree with Badiou that ‘being is not composed of objects, of small unities, being is composed of relations and movements of these relations and, finally, being is composed of relations between relations’, but there are several nuances which prevent me from becoming a complete disciple of Badiou. Badiou himself analyses the concept of multiplicity as one of the central concepts in his ontology and thus takes ZFC as the most appropriate formal description of multiplicities and relations between them. Taking ZFC as such a playground is problematic in many aspects. First of all, one can vary the choice of axioms and thus have non-equivalent presentations of multiplicities. For instance, one can replace the axiom of choice with the axiom of determinacy, which makes one of the versions of the Continuum Hypothesis provable5. However, the axiom of determinacy is inconsistent with the axiom of choice. Accepting or rejecting the axiom of choice also has quite a few implications for affordances. Thus, all those axiomatic nuances make many of the statements on how being is arranged contingent.

Furthermore, set theory and category theory maintain separate non-equivalent ontological perspectives, making the lens of category theory more profound. Category theory abstracts over the notion of a structure and considers the notion of a morphism as the primitive one, which moves the vision of ontology towards a dynamic picture rooted in transformations, procedures and processes, distancing from statically fixed structures. In set theory, the counterpart of morphisms is the set-theoretic definition of a function, reducing it to the set of tuples forming the graph of the corresponding function. This setting does not quite reflect the intuition of a function as a transformation or a procedure, but presents it as a static snapshot.

First of all, one can vary the choice of axioms and thus have non-equivalent presentations of multiplicities. For instance, one can replace the axiom of choice with the axiom of determinacy, which makes one of the versions of the Continuum Hypothesis provable. However, the axiom of determinacy is inconsistent with the axiom of choice. Accepting or rejecting the axiom of choice also has quite a few implications for our affordances. Thus, all those axiomatic nuances make many of our statements on how being is arranged contingent. Besides, set theory and category theory maintain separate non-equivalent ontological perspectives, making the lens of category theory more profound for us. Category theory abstracts over the notion of a structure and considers the notion of a morphism as the primitive one, which moves the vision of ontology towards a dynamic picture rooted in transformations, procedures and processes, distancing from statically fixed structures. In set theory, the counterpart of morphisms is the set-theoretic definition of a function, reducing it to the set of tuples forming the graph of the corresponding function. This setting does not quite reflect the intuition of a function as a transformation or a procedure, but presents it as a static snapshot.

It is thus worth shifting from a set-theoretic paradigm towards category theory for a more robust analysis of relations between relations. Nevertheless, I believe that set-theoretic centrism in the fashion of Badiou cannot be avoided completely. Category-theoretic entities in themselves are not expressed in the vacuum space. One often adjusts our set-theoretic foundations to legalise 'too large' objects. For example, Jacob Lurie’s Higher Topos Theory has a separate paragraph on Grothendieck universes that considerably enriches the power of ZFC, making this set theory expressible enough to equip infinity-categories with some ontological status. In metamathematics, we accept that we have a tower of theories where each succeeding theory makes its predecessors admissible, so no ultimate metamathematical theory exists. We have a sort of metamathematical contingency since our affordances are a matter of the choice of the foundations of mathematics. Such contingency might imply ontological pluralism if we identify the general principle of ontology with the axioms of a formal theory that we consider the foundations of mathematics. So the choice is to take category theory as a plausible depiction of ontology for modelling procedural knowledge, where actions are understood in terms of morphisms.

Thus, I would clarify the notion that being is composed of higher-order relations that are presumably presented by category-theoretic means. Now let me turn back to our starting point. Whenever one thinks of knowledge as awareness of a particular state of affairs or as an ability to perform an action and solve a task, it is also worth elaborating on knowing as the interaction between subjectness and ontology. Thus, one can refine our understanding of knowledge as an ability to represent particular relations by using currently available affordances. This refinement roughly approximates Ryle’s concept of knowing what, but without addressing knowing how, one would leave a conscious subject passive and, thus, deprive them of any subjectness at all.

Recall that I have already suggested thinking of procedural knowledge as the ability to solve a particular task. Let me consider a simple example. Assume one has a statement ‘I know that the totality of the Ackermann function is provable in Peano arithmetic’. The Rylean perspective would categorise this statement as an expression of propositional knowledge, claiming that some person is aware that the corresponding theorem is provable in Peano arithmetic. But one can interpret such a statement as an actual ability to provide a proof from the Peano axioms that the Ackermann function is total. The moral is that procedural knowledge requires functional interpretation in terms of generating actions by consuming input data.

So the necessity of modelling knowledge functionally, along with the aforementioned category-theoretic perspective on the dynamic nature of ontology, led to an assumption that the concept of an internal language has potential in this direction. First of all, a (hypothetical) project of ontology completely based on the formalism of category theory affords too much. For instance, categories in general can have any cardinality provided that one has enough postulates in our set theory. This approach would be too transfinite for us, which would not be too problematic if I sought to develop some alternative approach to ontology inspired by Badiou. But I rather need the representation of ontology that would be inclusive for a subject synthesising actions by perceiving already existing relations between entities described by morphisms.

A knowing subject is limited to their lifespan; one does not expect them to be immortal, so structures of arbitrary cardinality are generally out of a subject's perception unless they are provided with an observable description. The problem is that the criteria of being observable are rather obscure without further concretisation. As a convention, I would agree that a morphism is observable (or achievable) if it is equipped with a computational procedure performing an action corresponding to a morphism. One expects all morphisms to deliver the corresponding output (if it exists) by finite means. As I define the field of ontology as the category-theoretic presentation of relations and relations between relations, a computationally presented ontology is an ontology composed of achievable relations, that is, morphisms whose presentation is accessible by finite means. Therefore, an ability to solve a task or perform an action is a synthesis of new relations (aka morphisms) from already presented ones. I think of this formula as a metapostulate guiding one in achieving an interpretable system of procedural knowledge representation, with the ideas from our paper on linear logic and Cocteau categories as a candidate potentially providing a more rigorous basis for this principle.

The Curry-Howard correspondence for constructive logic allows operationalising mathematics understood in terms of propositional knowledge as we equip statements with the procedure realising them. But verbalised facts do not expire (at least not as fast as resources), and they are mostly reusable, so I conjecture that knowing how is knowledge whose premises are statically presented data. As Albert Dragalin, a Soviet-Hungarian proof theorist, noted in Mathematical Intuitionism: Introduction to Proof Theory:

In intuitionistic mathematics, just as in classical mathematics, we use certain idealisations. We assume a preservation principle, consisting in the fact that, if the truth of some proposition has been discovered, then it also remains true in the future. We also assume the principle of potential realisability consisting in the fact that a researcher can disregard the limitations in [their] resources of space and time.

Therefore, developing the mathematical foundations of propositional knowledge representation could be sought in constructive mathematics, but this is my assumption, requiring a deeper study. The Curry-Howard correspondence for linear logic plausibly describes computational actions relying on resource consumption, so I suggest considering the subexponential type theory, combining all the resource policies within a single system, as the alphabet of lingua franca for procedural knowledge representation.

The aim is to formulate the affordances and solutions with linear terms, which are well-typed in subexponential type theory, to have a more flexible mechanism of resource management. Categorical semantics plays the role of denotational semantics for such procedures, but, philosophically, categorical semantics represents an ontology composed of relations presented as morphisms. Thus, any syntactic well-typed procedure is reflected as a relation in an ontology, aka a morphism in a Cocteau category. I do not claim peremptorily that Leibniz’s programme is completely fulfilled, and the subexponential type theory is the ultimate characteristica universalis for procedural knowledge, as one still remains in the dark in too many aspects to achieve such a goal.

Instead of a Conclusion

Regardless of whether the formal system I presented fits as the ‘axiomatisation’ of procedural knowledge, I pose the question of what alternative trajectory I would suggest compared to today’s modus operandi, which is common in the industry. Knowledge representation is generally considered part of the contemporary artificial intelligence industry. As a result, methods based on neural networks and statistical approximations gradually absorb the problematic field of knowledge representation. It is not sufficient to develop the formalism based on linear logic and category theory to reach a more plausible modelling of knowledge by computational means and convince everybody to switch to monoidal categories. Even more, developing formalisms with practically working examples does not suffice to achieve the diversification of techniques in knowledge representation. Value dispositions in the industry should be critically scrutinised.

Many visionaries, investors and top-level managers are led by a rather simplistic system of values, being an amalgamation of libertarianism à la Ayn Rand, uncritical belief in progress and growth at any cost, and the cult of technology. Consider The Techno-Optimist Manifesto by billionaire venture capitalist and self-described “tech bro” Marc Andreessen as an example. Andreessen's manifesto reflects well the axiological6 principles that guide current tendencies in technology development. The manifesto (along with other outstanding pieces of thought, such as Why AI will save the world) presents the rapid tempo of technological progress as a self-contained value and the universal solution to the most crucial problems. Moreover, the manifesto declares those who propose some reflection beyond progress to be ‘enemies’. Market fundamentalism plays the role of an institution, forcing researchers and engineers’ ideas and inquiries to be market-fit and stigmatising the concepts beyond the needs of the marketplace as a sort of gnostical turpitude7. I believe that this system of beliefs is the axiological foundation of the industry's endeavours to subjugate all the segments of our life to neural networks and large language models.

I referred to the aforementioned socio-economic trends since I aim to revise today’s modus operandi in knowledge representation, viewed as a branch of mainstream artificial intelligence. It might not suffice to suggest a category-theoretic perspective on ontology along with a syntactic description with a variant of linear logic. Modus operandi is not only composed of well-working ideas coming from mathematics or science, but also the inertia of long-standing dispositions. I can summarise it with the following formula: consumerism implies the imitation of knowledge based on reductionism. Moreover, I tend to think that today’s development of artificial intelligence is the most demonstrative instantiation of such a formula. But let us first elaborate on the components of the formula: consumerism and the reductionist imitation of knowledge.

The concept of consumerism, thoroughly analysed by authors such as Jean Baudrillard and Zygmunt Bauman, has an uncountable number of dimensions. I focus on the consumerist treatment of ideas and knowledge. Briefly, one can characterise this disposition as ‘an idea X is valuable if it demonstrates a mid-term potential in solving a concrete practical problem‘. Generally, maintaining this disposition would not be so problematic if it were used just for solving practical problems. The absolutisation of this disposition is the instance of consumerism about the knowledge of ideas. For example, the neural network-based approach to natural language processing has the potential to leave linguistics and linguists behind in the development of language translation services. I suggest the reader have a look at this blog post8 describing ‘how linguists have lost the final battle’ at ABBYY since its management decided to dismiss researchers in linguistics to proceed with the machine learning approach. One can argue that this tendency does not devalue linguistics as a scientific discipline. This is the case indeed, but consumerism as a value preset is able to deter people from their involvement in research in linguistics, so the risk of erosion should not be overlooked, even though it may not be self-evident at this particular point in time.

This tendency does not exist in the vacuum: unifying all the entities of languages as vectors might demonstrate computational efficiency at the cost of flattening the picture of the nature of language and making it oversimplified (everything is a vector in the n-dimensional space) for the sake of more effective performance. As Baudrillard noted in The Consumer Society in more generic terms:

We are here at the heart of consumption as total organisation of everyday life, total homogenisation, where everything is taken over and superseded in the ease and translucidity of an abstract 'happiness'9, defined solely by the resolution of tensions10… All activities, labour, conflicts and seasons have been united and abolished in the same abstraction. The substance of life unified in this way, in this universal digest, can no longer have in it any meaning: what constituted the dreamwork, the labour of poetry and of meaning – in other words, the grand schemata of displacement and condensation, the great figures of metaphor and contradiction, which are based on the living interconnection of distinct elements – is no longer possible. The eternal substitution of homogeneous elements now reigns unchallenged. There is no longer any symbolic function, but merely an eternal combinatory of ‘ambience’ in a perpetual springtime.

Reductionism, in turn, is a philosophical disposition expressed by many authors in a variety of contexts. One can characterise reductionism as a belief that a particular phenomenon is completely explainable (or reducible) in terms of simpler constituents. The examples of reductionist theories are Marxism and psychoanalysis. Marxism in its orthodox form reduces the historical process to economic tensions, whereas Freudian psychoanalysis interprets the manifestations of a person’s mind as composed of subconscious sexual motives. Representing complex phenomena with simpler blocks is a well-working method in many aspects of cognition whenever a plausible approximation is sufficient instead of providing a thorough explanation. Building such representations does not make their creators automatically reductionists. However, identifying such representations of phenomena and their interpretation leads to the contemporary form of reductionism, when ideas and theories are evaluated by their ability to deliver an efficient solution only. I believe treating ideas, theories, and disciplines in such a way shapes the consumerist instance of reductionism, so today’s development in the mainstream artificial intelligence can be considered a manifestation of this trend.

Achieving higher levels of interpretability in, say, knowledge representation, is likely to require studying the mathematical foundations of computation and diving into more underlying aspects of what we mean by knowledge and how we distinguish knowledge from other forms of beliefs. I expect that interpretability is barely compatible with the reductionist modus operandi as a manifestation of consumerism. The approximation of concepts and relations between them should take a narrower place as a pragmatically convenient instrument of prototyping, but mixing such approximations with an interpretation is what I call the imitation of knowledge, as they are presented as data sets of statistical correlations.

The alternative way to achieve a ‘better working’ modus operandi cannot be just reduced to suggesting other mathematical means, as I have said above, but it requires overcoming the axiological basis or, at least, critical reflection on it. Overcoming reductionism, as I suppose, does not seem impossible without distancing from the consumerist valuation of ideas and problems. Explanations along with interpretable solutions lie beyond statistical approximations based on frequently reproduced correlations. Knowledge representation should depict the interconnections between a subject and the external states of affairs. Thus, achieving interpretability is a matter of multidisciplinary research in epistemology, ontology and logic as fields with a self-contained value, so I have tried to outline the first step towards this direction.

References

The references below are not a bibliographic list of sources, but a list for further acknowledgement, describing the reader to the multidisciplinary field that inspired my style of thought.

Alain Badiou. Being and Event, Theoretical Writings: Section I. Ontology is mathematics.

Roland Barthes. Elements of Semiology.

Jean Baudrillard. The Consumer Society.

Samuel Beckett. Waiting for Godot.

Francis Borceux. Handbook of Categorical Algebra.

Jorge Luis Borges. Labyrinths.

Olivia Caramello. Theories, Sites and Toposes.

Jean Cocteau. Le sang d’un poète, Orphée, Le testament d’Orphée.

Pierre-Louis Curien. Introduction to Linear Logic and Ludics.

Gilles Deleuze and Felix Guattari. A Thousand Plateaus.

Albert Dragalin. Mathematical Intuitionistic: Introduction to Proof Theory.

Michael Dummett. Elements of Intuitionism, Truth and Other Enigmas (in particular, The Philosophical Basis of Intuitionistic Logic)

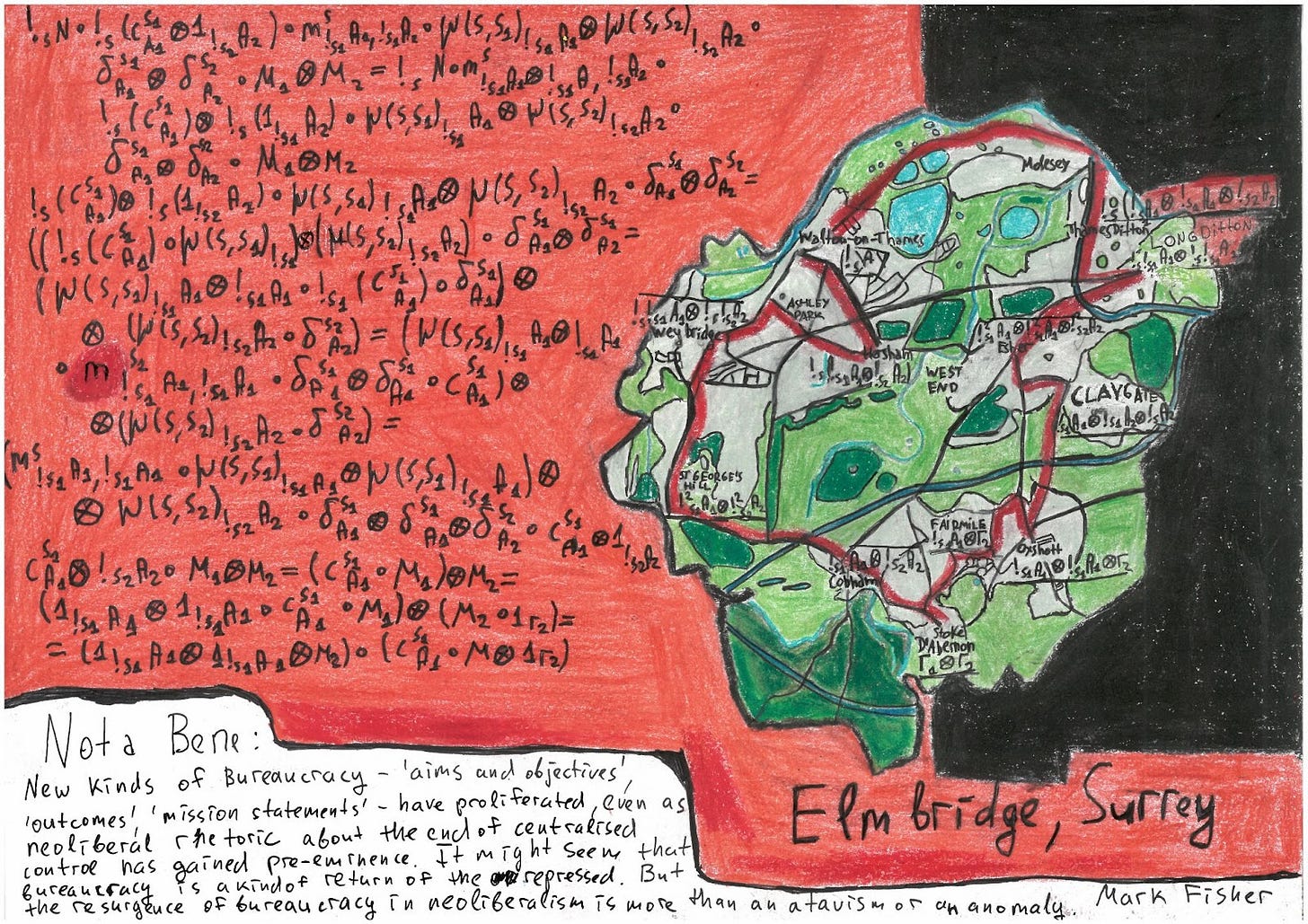

Mark Fisher. Capitalist Realism: Is There No Alternative?

Jean-Yves Girard. Linear Logic, The Blind Spot.

Jean-Luc Godard. Notre Musique, Film Socialisme, Adieu au Langage, Éloge de l'amour.

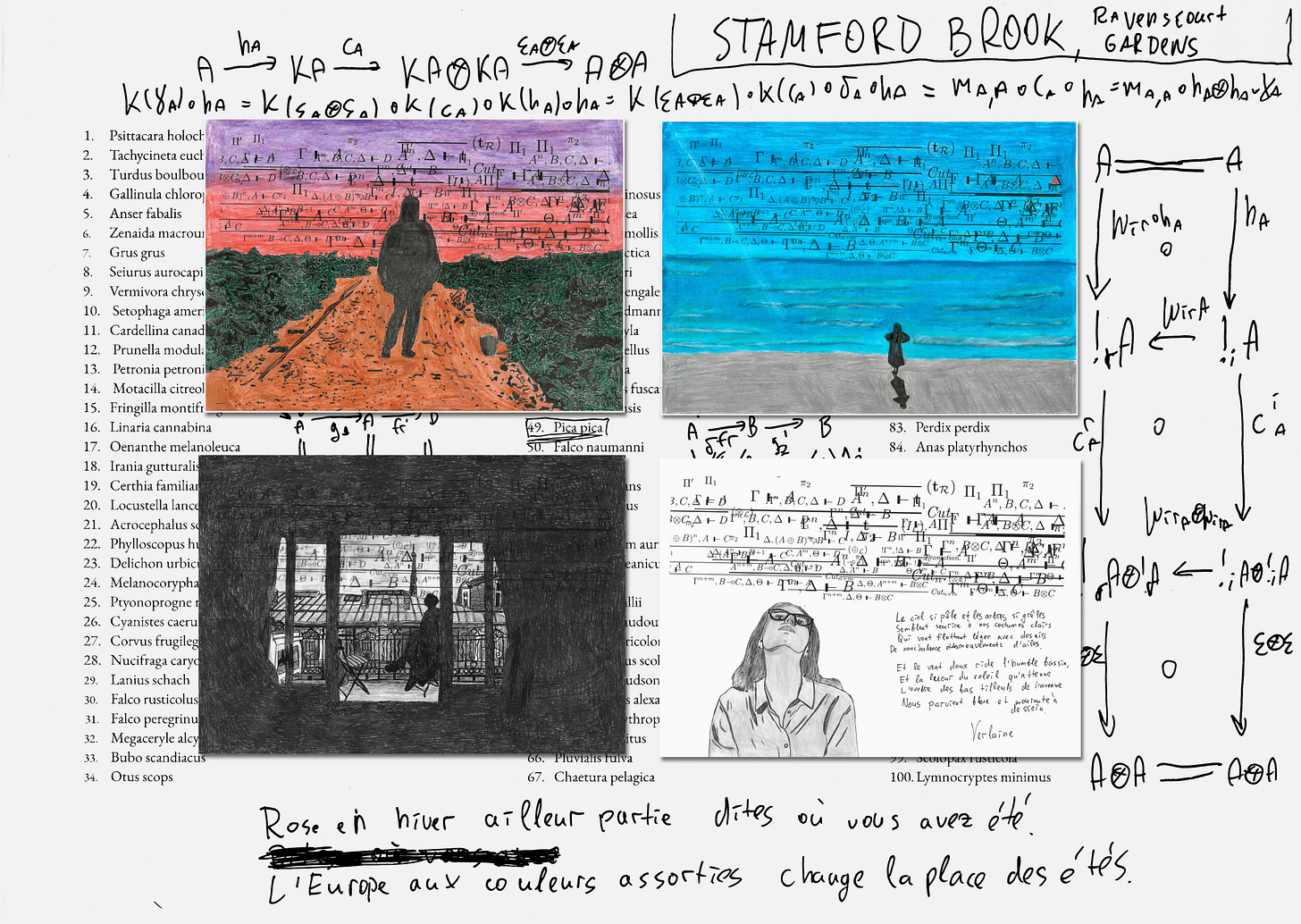

Peter Greenaway. A Walk Through H: The Reincarnation of an Ornithologist, The Falls, Papers.

Immanuel Kant. Critique of Pure Reason.

Joachim Lambek and Philip Scott. Introduction to Higher-Order Categorical Logic.

Jacob Lurie. Higher Topos Theory, Higher Algebra.

Paul-André Melliès. Categorical Semantics of Linear Logic, A Functorial Excursion Between Algebraic Geometry and Linear Logic.

Vladimir Nabokov. Invitation to a Beheading, Pale Fire.

Alain Resnais. L'Année Dernière à Marienbad.

Emily Riehl. Category Theory in Context.

Gilbert Ryle. The Concept of Mind.

Ross Street. The Formal Theory of Monads, Quantum Groups: A Path to Current Algebra.

Anne Troelstra. Lectures on Linear Logic.

Ludwig Wittgenstein. Philosophical Investigations.

Esse est concipi (to be is to be conceived) is a reminiscence of George Berkeley’s formula esse est percipi, i.e., to be is to be perceived.

The set-theoretic notation used in this example is purely illustrational, but generally addressing to the elements of objects in category theory is illegal.

! is called exponential since some of its formal properties resemble exponential maps from the theory of differential manifolds.

The term ‘assemblage’ is inspired by the concept of an assemblage from schizoanalysis by Gilles Deleuze and Felix Guattari.

I mean the form of the Continuum Hypothesis, claiming that there are no intermediate cardinalities between natural and real numbers.

The word ‘axiological‘ is a philosophical term referring to the principles of values.

The phrase ‘gnostical turpitude‘ refers to Vladimir Nabokov’s Invitation to a Beheading. Roughly, ‘gnostical turpitude‘ is Nabokov’s analogue of thoughtcrime.

The original text is written in Russian.

One can easily replace ‘happiness’ with ‘efficiency’.

One can substitute ‘tensions’ with ‘intellectual complexities’.

Kant's views of logic can be compatible with logical pluralism in some form. In your quote Kant describes his very loose concept of *general* logic. Kant's real contribution is his conception of *transcendental* logic, which is the logic of science or, more precisely, logic of mathematical physics à la Newton's Mechanics. Kant could buy the idea that logic of science varies from one scientific discipline to another and that logic can change along with progressive development of science.

We already discussed it elsewhere, but I would comment here once again. a=sqrt(2) and b=log_2(9) are the real numbers, for which the proof of irrationality is a school exercise, and a^b=3 is rational, again a simple constructive derivation. You don't need Liouville numbers or any fancy theory to constructively prove \exists a,b\in R : (a\notin Q and b\notin Q and a^b\in Q) - as stated.

I agree though that the assertion P="sqrt(2)^sqrt(2) is irrational" works as an example. But this is a different assertion. You provided a simple non-constructive proof, and constructive proof involves some theory (which may actually be non-constructive somewhere in its core though, - I am not a specialist in this field to claim there actually exists a constructive proof for P)

Sorry, I am a little bit pedantic about such things. As a former calculus teacher, I don't like it when examples refer to overcomplicated theories rather than clearly explain what they should explain.